Pattern recognition is, arguably, not exactly the full definition of intelligence, but it is neat. And, importantly, as clever as a thing that writes “poetry in the style of Shakespeare” and makes “images in the style of da Vinci” already is, we're just getting started with the recognition of patterns.

Us humans have always used patterns without thinking much about it – to choose a mate, find food, build things, decide if a situation is safe… Humans have an astonishing ability to recognise patterns. But we’re not actually here to talk about humans today. Because, as good as we are at it, it turns out we’re pretty inadequate compared to the competition – primarily due to our ‘onboard storage’.

It would have taken a pretty aggressive media diet to have missed the dawn of mainstream generative AI in the past little while. From ChatGPT to Midjourney to deepfakes. To the level that, now, “AI” is already well-entrenched in our cultural dialogue, and continuing to move at such a clip that anything specific I write here would already be hilariously out of date by the time you’re reading it.

So, what I want to do is think less about the implications of AI, and more about what AI actually represents, over the next few newsletters. And, because it’s the edge where most of us are exposed to AI right now, that means starting with Generative AI – basically the fancy-pants prediction engines that are driving the equal-parts-creativity/equal-parts-panic in the zeitgeist right now.

Of course, some level of ‘predictive AI’ has existed for decades.

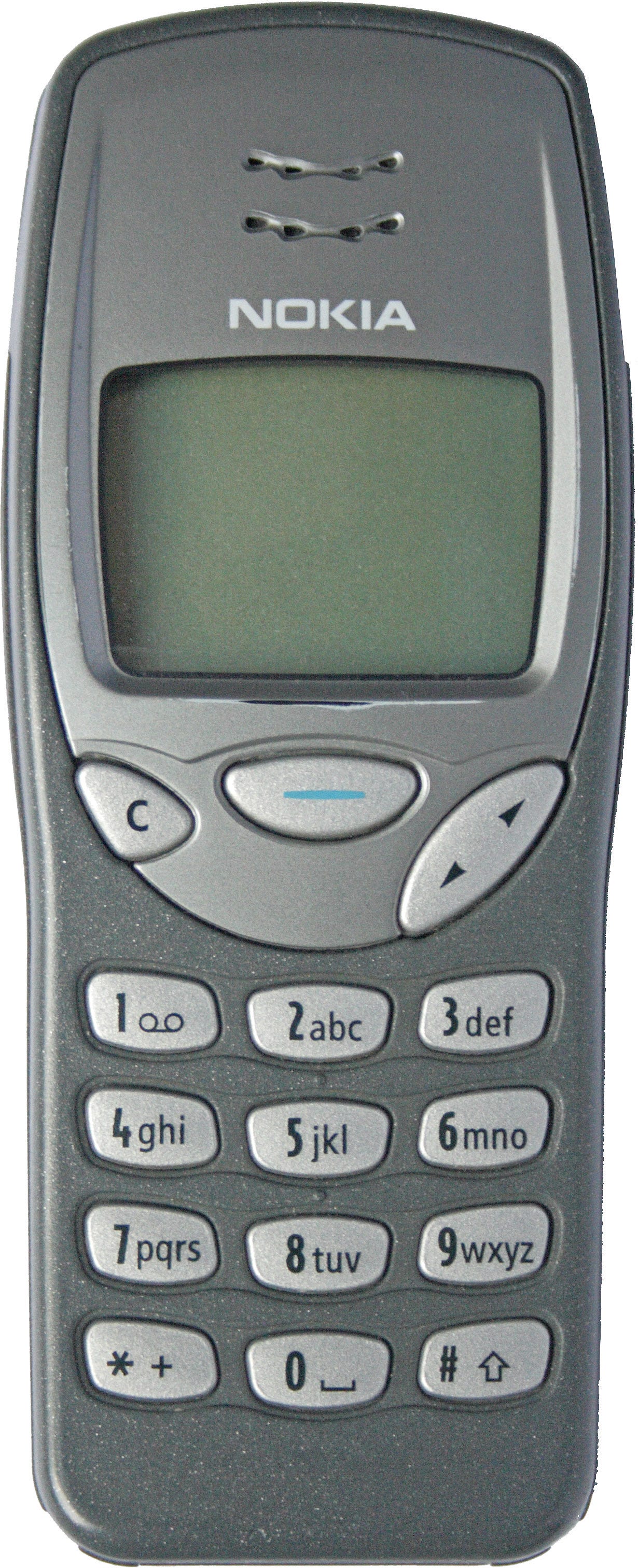

I had several mobile phones in the 1990s, including the one pictured, which popularised a feature called T9 Predictive Text.

Kids these days will have no idea how hard we had it but, back then, we needed to press each key multiple times to select the letter we wanted to use, to spell out words for text messages. What T9 did was predict the word you wanted to write, based on pressing each key just once. So, if you wanted to write “text”, you could just tap 8-3-9-8 (rather than 8-3-3-9-9-8). The phone simply referenced a dictionary to determine what word you were most likely trying to write with those numbers, updating the guesses with each successive button push.
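For the curious, here is a minimal sketch of that idea in Python. It is not the actual T9 implementation: the tiny word list and its popularity scores are made up for illustration, and the function names are my own. It only shows the principle of mapping words to key presses and ranking whatever matches.

```python
# Letters printed on each key of a standard phone keypad.
KEYPAD = {
    "2": "abc", "3": "def", "4": "ghi", "5": "jkl",
    "6": "mno", "7": "pqrs", "8": "tuv", "9": "wxyz",
}
LETTER_TO_DIGIT = {
    letter: digit for digit, letters in KEYPAD.items() for letter in letters
}

# Hypothetical mini dictionary: word -> made-up popularity score.
WORD_FREQUENCIES = {"text": 40, "good": 90, "home": 70, "gone": 50}


def word_to_digits(word):
    """Spell a word as the keys you would press once each."""
    return "".join(LETTER_TO_DIGIT[ch] for ch in word.lower())


def candidates(digits, word_frequencies):
    """Words whose key sequence starts with `digits`, most popular first."""
    matches = [
        word for word in word_frequencies
        if word_to_digits(word).startswith(digits)
    ]
    return sorted(matches, key=lambda word: -word_frequencies[word])


# The guesses narrow with each successive button push.
for pressed in ("8", "83", "839", "8398"):
    print(pressed, candidates(pressed, WORD_FREQUENCIES))
```

Several words can share the same keys, which is why the ranking matters: in this sketch `candidates("4663", WORD_FREQUENCIES)` matches “good”, “home” and “gone” at once, and the made-up scores decide which one is offered first.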

So, the idea of taking a relatively big data set – in this case, an English dictionary – and using it to predict the extremely-near future is not at all new. Even in a very mainstream form and product.

Obviously, the datasets used for things like Microsoft Bing and Adobe Firefly are far-far-far larger.

If you’ve done any reading on this, you’ll have come across terms like Large Language Model (LLM). This is basically just that same dictionary, only now appended with millions of extra pages of tightly-spaced-7-point footnotes, referencing how every word interacts with every other word, based on rules and patterns observed across different creative outlets.

This is where the ‘AI’ bit needs to get involved because, obviously, determining what those rules and patterns should be requires the studying of billions of blocks of text, audio, images and so on – a task massively beyond even the greatest polymaths in our species.

This enlarged data set is one reason why the possibilities of AI have, seemingly suddenly, become more sophisticated. This is also the main advantage AI has over us humans at the moment – the storage thing. But two other, actually more important, things have also happened – both related (to the degree that you could argue it’s really just one important thing) – to turn generative AI into the powerful tool it continues to become.

The first is the ability to process natural language – that’s a thing us humans have evolved to be pretty good at (our current ‘misinformation problem’ notwithstanding!). Once we learn a language, we can use our innate pattern recognition abilities to reconstitute meaning (both as output and as input) from a simple bunch of random sounds, colours, or shapes in a designated order. What has made generative AI useful recently is that it has reverse-engineered these patterns for us, in the most ‘human’ way possible. So, now, we can write a request (‘prompt’) using the same kind of natural language we speak to each other with, and have the LLM understand what it is we’re asking of it.

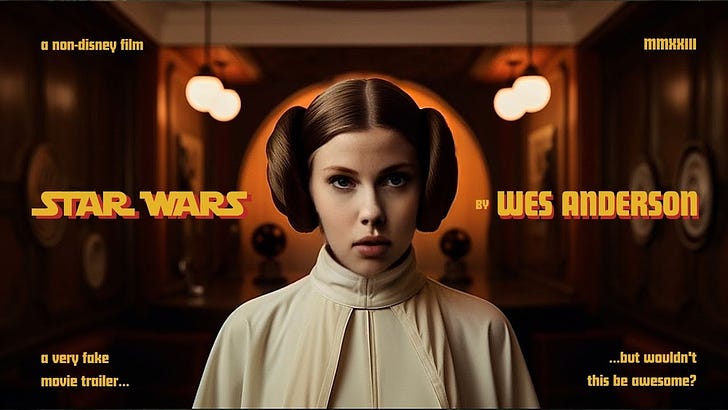

The second, and the one that is set to have the greatest impact on us, is the approach used to build these modern generative AIs, which is to simply convert any data point in any field into numbers. This is the piece in this revolution that is perhaps not so obvious to casual observers. But, if you’ve been paying attention, you’ll have noticed we didn’t just get the ability to generate vulgar poetry in the style of Lord Byron, we also simultaneously got the ability to generate pictures in the style of Rembrandt, voice-overs in the style of James Earl Jones, music in the style of Beethoven, and architecture in the style of Gehry...

When a human learns to do things like these – like learning the patterns of a language – they learn them one at a time. If you can play a mean beat on the drums, it doesn’t mean you can shape pottery… It doesn’t even mean you can play a bass guitar.

The real innovation with generative AI then is, once it has learnt how to identify patterns in one area, it can master effectively any subject – just using math.

It’s not obvious to us, because our brains simply don’t work like this. But all these advances are based on the same mathematical coding of patterns. Simply convert any data point – a colour, a sound, a word, an electrical signal – into a number; then convert any way of having those numbers interact with each other into a formula.
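To make that a little more concrete, here is a toy sketch in Python. It is an illustration under my own assumptions, not how any particular AI model stores its data: real systems learn far richer number representations than these. The variable names and the choice of cosine similarity as the “formula” are mine.

```python
import math

# A colour as numbers: red, green and blue, each from 0 to 255.
orange = (255, 165, 0)
red = (255, 0, 0)
blue = (0, 0, 255)

# A word as numbers: the Unicode code point of each character.
word_numbers = [ord(ch) for ch in "text"]

# A sound as numbers: a 440 Hz tone measured 8,000 times per second.
SAMPLE_RATE = 8000
sound_numbers = [
    math.sin(2 * math.pi * 440 * n / SAMPLE_RATE) for n in range(8)
]


def cosine_similarity(a, b):
    """One possible 'formula': how closely two lists of numbers point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


print(word_numbers)
print(cosine_similarity(orange, red))   # closer to 1: similar colours
print(cosine_similarity(orange, blue))  # 0: nothing in common
```

The same `cosine_similarity` function would work just as happily on two lists of sound samples or two encoded words. Once everything is a list of numbers, the formula doesn’t care what the numbers used to be.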

This belies the obvious complexity of these AI models but, fundamentally, whenever you come across a term like ‘algorithm’, this is all that’s really happening.

What this all means is that generative AI has been built on general-purpose principles, in a way that the original designers of all the “general purpose computers” through history couldn’t possibly have dreamed of! And in a way that should concern well-paid accountants, lawyers, software developers and sharebrokers just as much as it might concern destitute artists.

One quick example. Our brains have proven hard to decipher, but once you can measure the signals from them, ideas like recording dreams for playback the next morning, or tele-communicating with just a thought, are no longer actually far-fetched. It's reasonable to believe that, if we can build machines sensitive and durable enough to measure brain activity, a pattern-recognising AI with a bit of training could rebuild our thoughts and present them back to us as literal words and images. We’ve been able to measure the carrier signals of brain activity for some time, but we’ve never had the ability to recognise the patterns inside them in the ways we’ll likely soon be able to.

It's fair to say, this could do a lot of interesting things for the speed that we communicate at.

It could also cause some pretty severe privacy and justice issues. And gather some agonisingly good marketing data for any big companies we choose to allow ‘into’ our brains.

The recognition of a pattern appears to be a simple concept but, applied at overwhelming scale, it takes us from ‘saving a few button presses while text-messaging on a dumbphone’, to recognising disease and identifying cures, reinventing financial systems, creating entirely individualised entertainment, telepathy, and much more.

Suffice to say, it should be clear the path that pattern-recognition AI takes us down is actually unlikely to result in the sorts of anodyne humanoid-helper-bots that science fiction writers have pitched at us over the decades.

Regardless of whether you believe that AI will be mostly good or mostly bad for humanity, it’s certainly going to be bumpy for humanity.

It’s worth pointing out these things can simply be understood as an evolution of our development of computers. A computer has actually never been capable of naturally understanding human language, any more than an earthworm can. There has always been a need to convert a human instruction into machine code – the string of, typically, binary digits that the processor itself can use – and then back again, to deliver the response in a format we can understand.

So, a functional computer – at least until this point – needs to fundamentally be a translation machine before it can be anything else.

And that brings up the question of what a future computer might actually be. Because, we’re already contending with AI ‘black boxes’; parts of systems that are intellectually impenetrable to us, to the extent that we can’t see or understand how or why they identify the patterns they do. At some point these machines will be working with so much data, in such granular ways, that no-one – including their developers – will be able to decipher their output or intent, and the core ‘translation machine’ functionality will be moot.

At some point AI machines will be built that will reframe our relationship with them as the equivalent of an earthworm’s relationship to a human.

So. Consciousness. Next time…

-T